LLMs = NASAAP rather than BASAAP!

Came across Steven Shaviro’s thoughts on the critique of AI implicit in Adrian Tchaikovsky’s excellent “Children of Memory”, which I’d also picked up on. Of course, being Steven, it’s much more eloquent and thought-provoking, so I thought I’d paste it here.

The Corvids deny that they are sentient; the actual situation seems to be that sentience inheres in their combined operations, but does not quite exist in either of their brains taken separately. In certain ways, the Corvids in the novel remind me of current AI inventions such as ChatGPT; they emit sentences that are insightful, and quote bits and fragments of human discourse and culture in ways that are entirely apt; but (as with our current level of AI) it is not certain that they actually “understand” what they are doing and saying (of course this depends in part on how we define understanding). Children of Memory is powerful in the way that it raises questions of this sort — ones that are very much apropos in the actual world in terms of the powers and effects of the latest AI — but rejects simplistic pro- and con- answers alike, and instead shows us the difficulty and range of such questions. At one point the Corvids remark that “we know that we don’t think,” and suggests that other organisms’ self-attribution of sentience is nothing more than “a simulation.” But of course, how can you know you do not think without thinking this? and what is the distinction between a powerful simulation and that which it is simulating? None of these questions have obvious answers; the novel gives a better account of their complexity than the other, more straightforward arguments about them have done. (Which is, as far as I am concerned, another example of the speculative heft of science fiction; the questions are posed in such a manner that they resist philosophical resolution, but continue to resonate in their difficulty).

http://www.shaviro.com/Blog/?p=1866

Jason points to a great piece on Large Language Models, ChatGPT etc

“Say that A and B, both fluent speakers of English, are independently stranded on two uninhabited islands. They soon discover that previous visitors to these islands have left behind telegraphs and that they can communicate with each other via an underwater cable. A and B start happily typing messages to each other.

Meanwhile, O, a hyperintelligent deep-sea octopus who is unable to visit or observe the two islands, discovers a way to tap into the underwater cable and listen in on A and B’s conversations. O knows nothing about English initially but is very good at detecting statistical patterns. Over time, O learns to predict with great accuracy how B will respond to each of A’s utterances.

Soon, the octopus enters the conversation and starts impersonating B and replying to A. This ruse works for a while, and A believes that O communicates as both she and B do — with meaning and intent. Then one day A calls out: “I’m being attacked by an angry bear. Help me figure out how to defend myself. I’ve got some sticks.” The octopus, impersonating B, fails to help. How could it succeed? The octopus has no referents, no idea what bears or sticks are. No way to give relevant instructions, like to go grab some coconuts and rope and build a catapult. A is in trouble and feels duped. The octopus is exposed as a fraud.”

https://nymag.com/intelligencer/article/ai-artificial-intelligence-chatbots-emily-m-bender.html via Kottke.org

He goes on to talk about his experiences ‘managing’ a semi-self-driving car (I think it might be a Volvo, like I used to own?) where you have to be aware that the thing is an incredibly heavy, very fast toddler made of steel, with Dunning-Kruger-ish marketing promises pasted all over the top of it.

“You can’t ever forget the self-driver is like a 4-year-old kid mimicking the act of driving and isn’t capable of thinking like a human when it needs to. You forget that and you can die.”

That was absolutely my experience of my previous car too.

It was great for long stretches of motorway (freeway) driving in normal conditions, but if it was raining or things got more twisty/rural (which they do in most of the UK quite quickly), you switched it off sharpish.

I’m renting a Tesla (I know, I know) for the first time on my next trip to the States. It was a cheap deal, and it’s an EV, and it’s California, so I figure why not. I don’t think I’ll use Autopilot, however, having used semi-autonomous (level 2? 3?) driving before.

Perhaps there needs to be a ‘self-driving test’ for the humans about to go into partnership with very fast, very heavy semi-autonomous non-human toddlers before they are allowed on the roads with them…

This blog has turned into a Tobias Revell reblog/Stan account, so here’s a link to his nice riff on ChatGPT this week.

“LLMs are like being at the pub with friends, it can say things that sound plausible and true enough and no one really needs to check because who cares?”

Tobias Revell – “BOX090: THE TWEET THAT SANK $100BN”

Ben Terrett was the first person I heard quoting (indirectly) Mitchell & Webb’s notion of ‘Reckons’ – strongly held opinions that are loosely joined to anything factual or directly experienced.

LLMs are massive reckon machines.

Once upon a BERG times, Matt Webb and I used to get invited to things like FooCamp (MW still does…) and beforehand we camped out in the Sierra Nevada, far away from any network connection.

While there we spent a night amongst the giant redwoods, drinking whisky and concocting “things that sound plausible and true enough and no one really needs to check because who cares”.

It was fun.

We didn’t of course then feed those things back into any kind of mainstream discourse or corpus of writings that would inform a web search…

In my last year at Google I worked a little with LaMDA.

The main thing that learned UX and research colleagues investigating how it might be productised seemed clear on was that we have to remind people that these things are incredibly plausible liars.

Moreover, anyone thinking of using it in a product for people should be incredibly cautious.

That Google was “late to market” with a ChatGPT competitor is a feature not a bug as far as I’m concerned. It shouldn’t be treated as an answer machine.

It’s a reckon machine.

And most people outside of the tech industry hypetariat should worry about that.

And what it means for Google’s mission of “Organising the world’s information and making it universally accessible” – not that Google might be getting Nokia’d.

The impact of a search engine’s results on societies that treat them as scaffolding is the real problem…

Anyway.

My shallow technoptimism will be called into question if I keep going like this so let’s finish on a stupid idea.

British readers of a certain vintage (mine) might recall a TV show called “Call My Bluff” – where plausible lying about the meaning of obscure words by charming middlebrow celebrities was rewarded.

Here’s Sir David Attenborough to explain it:

It’s since been kinda remixed into “Would I lie to you” (featuring David Mitchell…) and if you haven’t watched Bob Mortimer’s epic stories from that show – go, now.

Perhaps – as a public service – the BBC and the Turing Institute could bring Call My Bluff back – using the contemporary UK population’s love of a competitive game show format (The Bake Off, Strictly, Taskmaster) to involve them in an adversarial critical network to root out LLMs’ fibs.

The UK then would have a massive trained model as a national asset, rocketing it back to post-Brexit relevance!

I was fortunate to be invited to the wonderful (huge) campus of TU Delft earlier this year to give a talk on “Designing for AI.”

I felt a little bit more of an imposter than usual – as I’d left my role in the field nearly a year ago – but it felt like a nice opportunity to wrap up what I thought I’d learned in the last 6 years at Google Research.

Below is the recording of the talk – and my slides with speaker notes.

I’m very grateful to Phil Van Allen and Wing Man for the invitation and support. Thank you Elisa Giaccardi, Alessandro Bozzon, Dave Murray-Rust and everyone at the faculty of industrial design engineering at TU Delft for organising a wonderful event.

The excellent talks of my estimable fellow speakers – Elizabeth Churchill, Caroline Sinders and John can be found on the event site here.

Hello!

This talk is mainly a bunch of work from my recent past – the last 5/6 years at Google Research. There may be some themes connecting the dots I hope! I’ve tried to frame them in relation to a series of metaphors that have helped me engage with the engineering and computer science at play.

I won’t labour the definition of metaphor or why it’s so important in opening up the space of designing AI, especially as there is a great, whole paper about that by Dave Murray-Rust and colleagues! But I thought I would race through some of the metaphors I’ve encountered and used in my work in the past.

The term AI itself is best seen as a metaphor to be translated. John Giannandrea was my “grand boss” at Google and headed up Google Research when I joined. JG’s advice to me years ago still stands me in good stead for most projects in the space…

But the first metaphor I really want to address is that of the Optometrist.

This image of my friend Phil Gyford (thanks Phil!) shows him experiencing something many of us have done – taking an eye test in one of those wonderful steampunk contraptions where the optometrist asks you to stare through different lenses at a chart, while asking “Is it better like this? Or like this?”

This comes from the ‘optometrist’ algorithm work by colleagues in Google Research working with nuclear fusion researchers. The AI system optimising the fusion experiments presents experimental parameter options to a human scientist, in the mode of an eye-testing optometrist: ‘better like this, or like this?’
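A minimal sketch of that ‘better like this, or like this?’ loop, under loud assumptions: this is not the published algorithm, just a toy in which the machine proposes a nearby variant of the current settings and a chooser picks between the two. The names `optometrist_search` and `simulated_scientist`, the step size and the hidden target are all invented for illustration; in real use the chooser would be a person looking at experimental results.

```python
import random


def optometrist_search(start, prefers, step=0.5, rounds=50, seed=0):
    """Offer the chooser pairs of settings: the current ones and a nearby variant.

    `prefers(a, b)` returns True when the chooser likes `a` better than `b`.
    The search never needs a numeric score, only the chooser's preference.
    """
    rng = random.Random(seed)
    current = list(start)
    for _ in range(rounds):
        # Propose a small random tweak to every parameter.
        candidate = [x + rng.uniform(-step, step) for x in current]
        # "Better like this, or like this?"
        if prefers(candidate, current):
            current = candidate
    return current


def simulated_scientist(a, b, target=(1.0, -2.0)):
    """Stand-in for the human: prefers whichever option is closer to a hidden target."""
    def dist(p):
        return sum((x - t) ** 2 for x, t in zip(p, target))
    return dist(a) < dist(b)


settings = optometrist_search([0.0, 0.0], simulated_scientist)
print(settings)
```

The design point is that the human only ever answers a comparison, which is easy to do intuitively, while the machine handles the bookkeeping of where to look next.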

For me that exchange calls to mind this famous scene of human-computer interaction: the photo enhancer in Blade Runner.

It makes the human the ineffable intuitive hero, but perhaps masking some of the uncanny superhuman properties of what the machine is doing.

The AIs are magic black boxes, but so are the humans!

Which has led me in the past to consider such AI-systems as ‘magic boxes’ in larger service design patterns.

How does the human operator ‘call in’ or address the magic box?

How do teams agree it’s ‘magic box’ time?

I think this work is as important as de-mystifying the boxes!

Lais de Almeida – a past colleague at Google Health and before that DeepMind – has looked at just this in terms of the complex interactions in clinical healthcare settings through the lens of service design.

How does an AI system that can outperform human diagnosis (i.e. the retinopathy AI from DeepMind shown here) work within the expert human dynamics of the team?

My next metaphor might already be familiar to you – the centaur.

[Certainly I’ve talked about it before…!]

If you haven’t come across it:

Garry Kasparov famously took on chess-AI Deep Blue and was defeated (narrowly).

He came away from that encounter with an idea for a new form of chess where teams of humans and AIs played against other teams of humans and AIs… dubbed ‘centaur chess’ or ‘advanced chess’

I first started investigating this metaphorical interaction about 2016 – and around those times it manifested in things like Google’s autocomplete in Gmail etc – but of course the LLM revolution has taken centaurs into new territory.

This very recent paper for instance looks at the use of LLMs not only in generating text but then coupling that to other models that can “operate other machines” – ie act based on what is generated in the world, and on the world (on your behalf, hopefully)

And the notion of a Human/AI agent team is something I looked into with colleagues in Google Research’s AIUX team for a while – in numerous projects we did under the banner of “Project Lyra”.

Rather than AI systems that a human interacts with e.g. a cloud based assistant as a service – this would be pairing truly-personal AI agents with human owners to work in tandem with tools/surfaces that they both use/interact with.

And I think there is something here to engage with in terms of ‘designing the AI we need’ – being conscious of when we make things that feel like ‘pedal-assist’ bikes, amplifying our abilities and reach vs when we give power over to what political scientist David Runciman has described as the real worry. Rather than AI, “AA” – Artificial Agency.

[nb this is interesting on that idea, also]

We worked with London-based design studio Special Projects on how we might ‘unbox’ and train a personal AI, allowing safe, playful practice space for the human and agent where it could learn preferences and boundaries in ‘co-piloting’ experiences.

For this we looked to techniques of teaching and developing ‘mastery’ to adapt into training kits that would come with your personal AI.

On the ‘pedal-assist’ side of the metaphor – the space of ‘amplification’ – I think there is also a question of embodiment in the interaction design and a tool’s “ready-to-hand”-ness. Related to ‘where the action is’ is “where the intelligence is”.

In 2016 I was at Google Research, working with a group that was pioneering techniques for on-device AI.

Moving the machine learning models and operations to a device gives great advantages in privacy and performance – but perhaps most notably in energy use.

If you process things ‘where the action is’ rather than firing up a radio to send information back and forth from the cloud, then you save a bunch of battery power…

Clips was a little autonomous camera that had no viewfinder but was trained out of the box to recognise what humans generally like to take pictures of, so you could be in the action. The ‘shutter’ button was just that – but also a ‘voting’ button – training the device on what YOU want pictures of.

There is a neural network onboard the Clips initially trained to look for what we think of as ‘great moments’ and capture them.

It had about 3 hours of battery life and a 120º field of view, and could be held, put down on picnic tables, or clipped onto backpacks or clothing. It was designed so you don’t have to decide between being in the moment and capturing it. Crucially – all the photography and processing stays on the device until you decide what to do with it.

This sort of edge AI is important for performance and privacy – but also energy efficiency.

A mesh of situated “Small models loosely joined” is also a very interesting counter narrative to the current massive-model-in-the-cloud orthodoxy.

This from Pete Warden’s blog highlights the ‘difference that makes a difference’ in the physics of this approach!

And I hope you agree addressing the energy usage/GHG-production performance of our work should be part of the design approach.

Another example from around 2016-2017 – the on-device “Now Playing” functionality that was built into Pixel phones to quickly identify music using recognisers running purely on the phone. Subsequent Pixel releases have since leaned on these approaches, with dedicated TPUs for on-device AI becoming selling points (as they have for iOS devices too!)

And as we know ourselves we are not just brains – we are bodies… we have cognition all over our body.

Our first shipping AI on-device felt almost akin to these outposts of ‘thinking’ – small, simple, useful reflexes that we can distribute around our cyborg self.

And I think this approach again is a useful counter narrative that can reveal new opportunities – rather than the centralised cloud AI model, we look to intelligence distributed about ourselves and our environment.

A related technique pioneered by the group I worked in at Google is Federated Learning – allowing distributed devices to train privately to their context, but then aggregating that learning to share and improve the models for all while preserving privacy.

This once-semiheretical approach has become widespread practice in the industry since, not just at Google.
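To make the shape of federated learning a bit more concrete, here is a toy sketch of federated averaging, assuming a one-parameter model `y = w * x` and three made-up devices. The function names, the learning rate and the data are all hypothetical, and this is nothing like production code (real systems layer on things such as secure aggregation). The point it illustrates: each device trains on its own data, and only the updated weight travels back to be averaged.

```python
def local_update(weight, data, lr=0.1):
    """Train on one device: a single pass of gradient descent on y = w * x.

    The raw (x, y) pairs never leave this function; only the new weight does.
    """
    w = weight
    for x, y in data:
        grad = 2 * (w * x - y) * x  # derivative of squared error w.r.t. w
        w -= lr * grad
    return w


def federated_round(global_weight, devices):
    """Average the locally trained weights, weighted by each device's sample count."""
    total = sum(len(data) for data in devices)
    return sum(local_update(global_weight, data) * len(data) for data in devices) / total


# Hypothetical private datasets, one per device, all following y = 2x.
devices = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(1.0, 2.0)],
    [(3.0, 6.0), (0.5, 1.0)],
]

w = 0.0
for _ in range(20):
    w = federated_round(w, devices)
print(w)  # approaches 2.0, the slope shared by every device's data
```

Each round costs one small number per device in communication, which is also why the approach sits nicely alongside the battery and privacy arguments for on-device models.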

My next metaphor builds further on this thought of distributed intelligence – the wonderful octopus!

I have always found this quote from ETH’s Bertrand Meyer inspiring… what if it’s all just knees! No ‘brains’ as such!!!

In Peter Godfrey-Smith’s recent book he explores different models of cognition and consciousness through the lens of the octopus.

What I find fascinating is the distributed, embodied (rather than centralized) model of cognition they appear to have – with most of their ‘brains’ being in their tentacles…

And moving to fiction, specifically SF – this wonderful book by Adrian Tchaikovsky depicts an advanced race of spacefaring octopi that have three minds that work in concert in each individual: “Three semi-autonomous but interdependent components, an arm-driven undermind (their Reach, as opposed to the Crown of their central brain or the Guise of their skin)”

I want to focus on that idea of ‘guise’ from Tchaikovsky’s book – how we might show what a learned system is ‘thinking’ on the surface of interaction.

We worked with Been Kim and Emily Reif in Google Research, who were investigating interpretability in models using a technique called Testing with Concept Activation Vectors, or TCAV – allowing subjectivities like ‘adventurousness’ to be trained into a personalised model and then drawn onto a dynamic control surface for search – a constantly reacting ‘guise’ skin that allows a kind of ‘2-player’ game between the human and their agent searching a space together.

We built this prototype in 2018 with Nord Projects.
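For the curious, a toy sketch of the concept-vector idea behind TCAV, with heavy caveats: the activations and gradients below are invented numbers, and the direction is a simplified stand-in (a normalised difference of means) rather than the linear classifier the TCAV work actually fits. It is not the prototype’s code. What it shows is the two-step shape: find a direction in a model’s internal activations that separates examples of a concept (say, ‘adventurous’ items) from random ones, then ask how often nudging along that direction would raise the model’s output.

```python
def mean_vector(rows):
    """Column-wise mean of a list of equal-length activation vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def concept_direction(concept_acts, random_acts):
    """Simplified concept vector: unit vector from the random mean to the concept mean.

    Assumption: TCAV proper trains a linear classifier and takes the normal
    to its decision boundary; the difference of means is only a rough proxy.
    """
    diff = [c - r for c, r in zip(mean_vector(concept_acts), mean_vector(random_acts))]
    norm = sum(d * d for d in diff) ** 0.5
    return [d / norm for d in diff]


def concept_sensitivity_score(gradients, direction):
    """Fraction of examples whose output rises when activations move toward the concept."""
    positive = sum(
        1 for g in gradients
        if sum(gi * di for gi, di in zip(g, direction)) > 0
    )
    return positive / len(gradients)


# Hypothetical layer activations for 'adventurous' examples vs random ones.
concept_acts = [[2.0, 0.1], [1.8, -0.1], [2.2, 0.0]]
random_acts = [[0.0, 0.1], [0.2, -0.1], [-0.2, 0.0]]
direction = concept_direction(concept_acts, random_acts)

# Hypothetical gradients of the model's output with respect to those activations.
gradients = [[1.0, 0.5], [0.5, -1.0], [-0.2, 0.3], [0.8, 0.0]]
print(concept_sensitivity_score(gradients, direction))
```

A score near 1 would suggest the model’s output leans on the concept for most examples; a score near 0.5 suggests it is not doing much work.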

This is CavCam and CavStudio – more work using TCAVs by Nord Projects again, with Alison Lentz, Alice Moloney and others in Google Research examining how these personalised trained models could become reactive ‘lenses’ for creative photography.

There are some lovely UI touches in this from Nord Projects also: for instance the outline of the shutter button glowing with differing intensity based on the AI confidence.

Finally – the Rubber Duck metaphor!

You may have heard the term ‘rubber duck debugging’? Whereby you solve your problems or escape creative blocks by explaining them out loud to a rubber duck – or, in the case of this work from 2020 by my then team in Google Research (AIUX), an AI agent.

We did this through the early stages of covid where we felt keenly the lack of informal dialog in the studio leading to breakthroughs. Could we have LLM-powered agents on hand to help make up for that?

And I think that ‘social’ context for agents in assisting creative work is what’s being highlighted here by the founder of Midjourney, David Holz. They deliberately placed their generative system in the social context of Discord to avoid the ‘blank canvas’ problem (as well as supercharge their adoption) [reads quote]

But this latest much-discussed revolution in LLMs and generative AI is still very text based.

What happens if we take the interactions from magic words to magic canvases?

Or better yet multiplayer magic canvases?

There’s lots of exciting work here – and I’d point you (with some bias) towards an old intern colleague of ours – Gerard Serra – working at a startup in Barcelona called “Fermat”

So finally – as I said I don’t work at this as my day job any more!

I work for a company called Lunar Energy that has a mission of electrifying homes, and moving us from dependency on fossil fuels to renewable energy.

We make solar battery systems but also AI software that controls and connects battery systems – to optimise them based on what is happening in context.

For example this recent (September 2022) typhoon warning in Japan where we have a large fleet of batteries controlled by our Gridshare platform.

You can perhaps see in the time-series plot the battery sites ‘anticipating’ the approach of the typhoon and making sure they are charged to provide effective backup to the grid.
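As a purely illustrative sketch of what ‘anticipating’ can mean in code (and emphatically not how Gridshare works): a battery normally aims for a middling state of charge, but once a storm warning falls inside some lead time it aims for full. Every name and number here, including `lead_hours`, the targets and the charge rate, is a made-up assumption.

```python
def target_state_of_charge(hours_to_storm, normal_target=0.5,
                           backup_target=1.0, lead_hours=24):
    """Pick the fraction of capacity to aim for.

    `hours_to_storm` is None when there is no warning in the forecast.
    """
    if hours_to_storm is None or hours_to_storm > lead_hours:
        return normal_target
    return backup_target


def plan_charge(current_soc, hours_to_storm, max_rate_per_hour=0.2):
    """Return how much to charge in the next hour, as a fraction of capacity."""
    target = target_state_of_charge(hours_to_storm)
    if current_soc >= target:
        return 0.0
    # Never exceed the hardware's hourly charge rate or overshoot the target.
    return min(max_rate_per_hour, target - current_soc)


print(plan_charge(0.4, None))  # no warning: a small top-up towards 50%
print(plan_charge(0.4, 12))    # storm in 12 hours: charge as fast as allowed
```

A real system would be forecasting and optimising rather than following a fixed rule, but the visible effect in a plot would be similar: the fleet filling up ahead of the weather.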

And I’m biased of course – but think most of all this is the AI we need to be designing, that helps us at planetary scale – which is why I’m very interested by the recent announcement of the https://antikythera.xyz/ program and where that might also lead institutions like TU Delft for this next crucial decade toward the goals of 2030.

Like a lot of folks, I’ve been messing about with the various AI image generators as they open up.

While at Google I got to play with language model work quite a bit, and we worked on a series of projects looking at AI tools as ‘thought partners’ – but mainly in the space of language with some multimodal components.

As a result perhaps – the things I find myself curious about are not so much the models or the outputs – but the interfaces to these generator systems and the way they might inspire different creative processes.

For instance – Midjourney operates through a Discord chat interface – reinforcing perhaps the notion that there is a personage at the other end crafting these things and sending them back to you in a chat. I found a turn-taking dynamic underlines play and iteration – creating an initially addictive experience despite the clunkiness of the UI. It feels like an infinite game. You’re also exposed (whether you like it or not…) to what others are producing – and the prompts they are using to do so.

DALL-E and Stable Diffusion via DreamStudio have more of a ‘traditional’ tool UI, with a canvas where the prompt is rendered, that the user can tweak with various settings and sliders. It feels (to me) less open-ended – but more tunable, more open to ‘mastery’ as a useful tool.

All three to varying extents resurface prompts and output from fellow users – creating a ‘view-source’ loop for newbies and dilettantes like me.

Gerard Serra – who we were lucky to host as an intern while I was at Google AIUX – has been working on perhaps another possibility for ‘co-working with AI’.

While this is back in the realm of LLMs and language rather than image generation, I am a fan of the approach: creating a shared canvas that humans and AI co-work on. How might this extend to image generator UI?

Got some fun speaking gigs lined up, mainly going to be talking (somewhat obliquely) about my work at Google AI over the last few years and why we need to make centaurs not butlers.

June

August

November

Then I’ll probably shut up again for a few years.

If you (or anyone) still read this you’re probably aware I’ve been banging on about Centaurs for a little while.

I started idly sketching something that could become a shorthand for a ‘centaur’ actor in a system. The kind of visual shorthand that you might often use on whiteboards or in sketches of flows in designing interactive systems.

For example… back in 2006 I sketched this…

My first centaur symbol sketches… was trying to make it something quick and fluid but kept getting hung up on the tail…

I then progressed to subjecting colleagues (thanks Tim) to impromptu lifesize whiteboard centaur sketches…

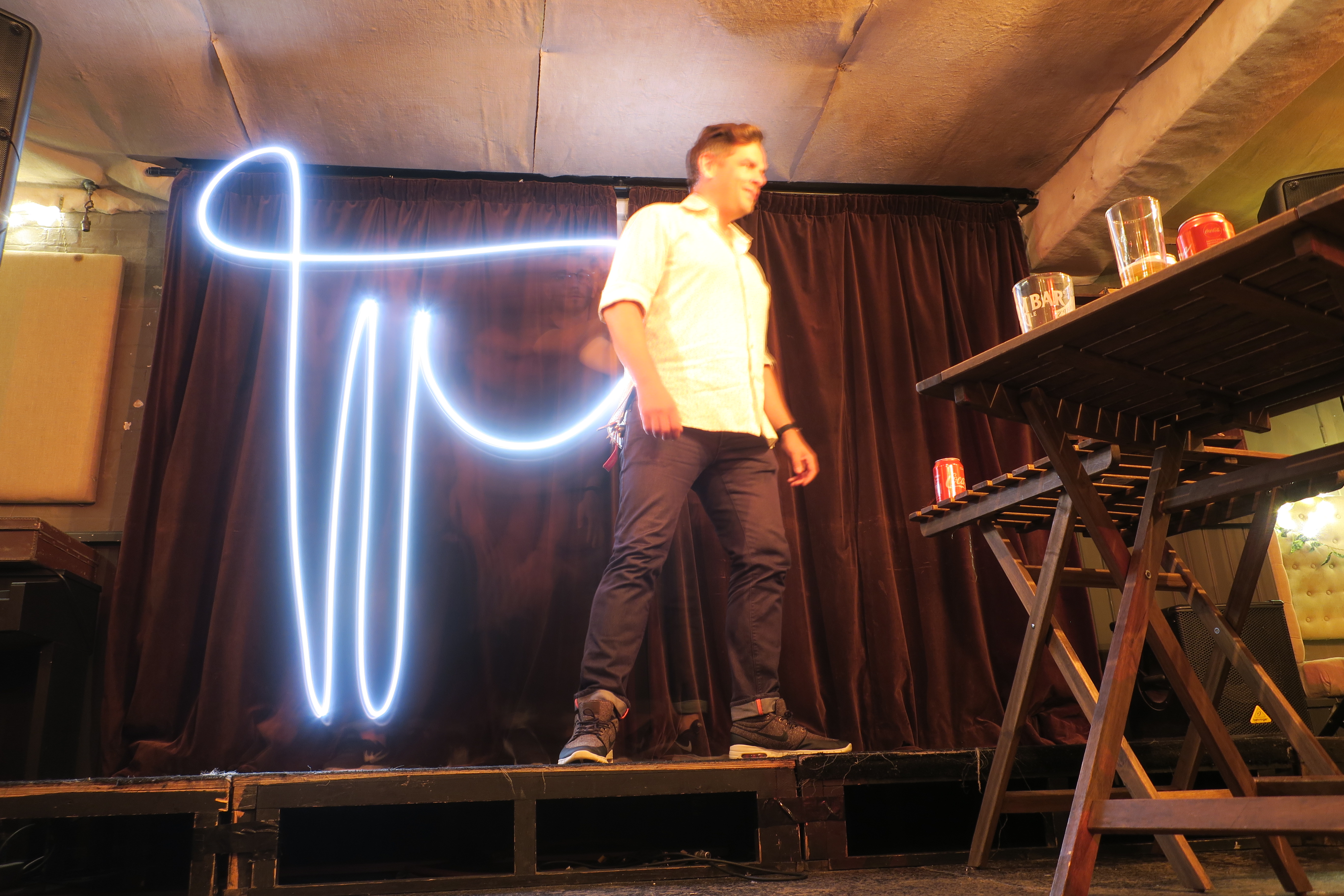

But then I remembered Picasso’s 1949 light paintings of centaurs which inspired me to do some quick long-exposure experiments.

Again, long-suffering colleagues were pressed into service (after buying them some beers…)

And I think that the constraint of having to paint the centaur body in a few seconds of long exposure got me to a more fluid, fluent expression

But then I think in the end it was Nuno who nailed the tail on this old designer…

More centaurs soon, no doubt.

The WSJ published an “explainer” on visual facial recognition technology recently.

They’re to be commended on the clear wording of their intro, and policy on personal/biometric info…

As most people who have known me for any length of time will tell you, unless I’m actively laughing or smiling, most of the time my face looks like I want to murder you.

While this may have had unintended benefits for me in the past – say in negotiations, college crits or design reviews – the advent of pervasive facial recognition and in particular ‘emotion detection’ may change that.

“Affective computing” has been around as an academic research topic for decades of course, but as with much in machine intelligence now it’s fast, cheap and going to be everywhere.

I wonder.

How many unintended micro-aggressions will I perpetrate against the machines? What essential-oil mood enhancers will mysteriously be recommended to me? Will my car refuse to let me take manual control?

Perhaps I’ll tell the machines what Joss Whedon/Mark Ruffalo’s Hulk divulges as the source of his powers: