Part 1: No Miracles Necessary in the Chobani Cinematic Universe

I realise I’ve had a set of beliefs.

Some of which are (unfortunately, probably) somewhere on the spectrum toward the extreme techno-optimist views since espoused by people with various dubious political views.

That is – despite the patient explanations of various far-cleverer friends of mine – I find I cannot become comfortable with even the most cosy narratives of what has come to be called “degrowth”.

Perhaps it is easier for me to imagine the end of the world rather than the end of capitalism, but it does rather seem that we missed that already.

We have gone beyond the end of capitalism into techno-feudalism and the beginning era of the Klepts – and are very much in the foothills of The Jackpot – so maybe Mark Fisher’s phrase gets updated with an “and” instead of the “rather than”…

I’m no PPE grad, and my discomfort with degrowth is not all that articulate (and subsequently it will be deconstructed articulately by those who hold it as a TINA of the left) but simply put, the root of my unease with the term is “who does it hurt”.

It just doesn’t feel like the pursuit of degrowth would be any more equitable globally than untrammelled hyper-capitalist growth.

Maybe I’m very wrong – but globally-managed, distributed, equitable degrowth just doesn’t seem plausible.

And the more likely ‘degrowth for me, no growth for thee’ also doesn’t seem like it will fly (or take the train). I guess most of all when I hear the term degrowth I flinch from the point of view of the privilege (including mine) it requires to imagine it.

Why am I putting this lengthy and awkward disclaimer here?

I guess because I am a shamefaced technoptimist – the name of the blog gives that away – but of the fully-automated luxury communism variety (actually I’d plump for semi-automated convivial social democracy, but then I’m also a bit of a centrist dad to add to my sins) and also that I’m not in the DAC/micronukes/fusion camp of extreme VC-led technoptimism around climate.

To be clear before you start an intervention, I’m certainly not in Marc Andreessen’s camp – I think / hope I’m sat at the bar somewhere in between Dave Karpf and Noah Smith.

If anything – I’m in the “No Miracles Needed” camp – by which I refer to Mark Jacobson’s exhaustive book proposing we have everything we need in solar, wind and storage technology to get us through the great filter.

Don’t get me wrong – I’d love fusion to happen – but I’ve been thinking that for about 40 years or more.

Was I the only one to rip the press cuttings of Fleischmann and Pons from my teenage bedroom wall with a tear in my eye?

I recently read Arthur Turrell’s “The Star Builders” – and though it protests we are closer than we have ever been – it still seems asymptotically out of reach.

I’m also not waving away the extractive toll of ‘no miracles necessary’ on the planet, or the regimes and injustices that can be supported by it – though it’s always worth posting this as a reminder.

The recent work by Superflux for the WEF underlines the importance of addressing the many other planetary boundaries and negative impacts on the Earth’s systems that our current Standard Operating Procedures are causing.

And this recent piece in Vice debunking superficial “green growth” (via Dan Hill) is worth a read too.

Adam recently reminded me over lunch – even the “No Miracles Necessary” future depends on the not-insubstantial miracle of having a complex world economy and industrial base to manufacture the PV panels, batteries, and turbines.

Again – the first minutes of James Burke’s “Connections” spring to mind in terms of the vertiginous tangle of systems we rely on.

Climate/Earth-System breakdown could put paid to that too.

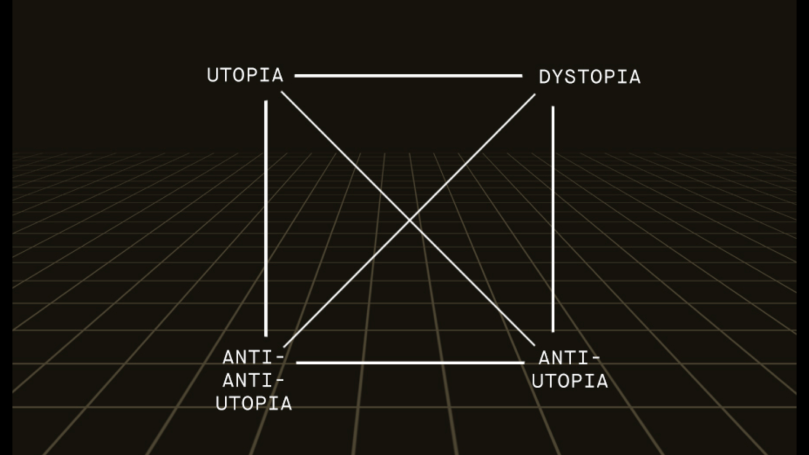

But ultimately – I am a designer in the technology sector, for the past two years in the energy tech sector – and my view of the designer in that world is to help imagine, illustrate, conceive and communicate the ‘protopia’ or anti-anti-utopia that the ‘no miracles needed’ prescription could lead to (more on this later).

As I said in Oslo, and before/since – my measure of the state of that art is not from Hollywood or Cupertino – but a yoghurt company based in New York State.

AHEM.

Yep.

Ultimately, I’m a middle-aged, middle-class white man in the global north clinging on to the fictional technologically-advanced long term futures for humanity that he grew up with – whether they are The Federation, The Culture, The various KSR Mondragonian world-lines or Fully Automated Luxury Communism.

So maybe read the rest of this with that and a pinch of sustainably-sourced salt in mind…

Part 2: I can’t see the other side of the donut from Earth.

I’m sure many of you reading this will have read Kate Raworth’s excellent “Donut Economics” – or at least have a passing acquaintance with the central idea.

That is – we should look to inhabit a zone between resource use to support a social floor of equity and fairness and the planetary boundaries that provide those resources – which describes the diagrammatic donut.

But – what if one of those planetary boundaries is not like the others?

What if one of those planetary boundaries is not quite the same?

If one of those planetary boundaries is not like the others…

Now it’s time to play our game…

The potential renewable energy we could harness as a civilisation is vast.

The solar potential alone is 10⁴ times more than we currently need.

That’s before we go beyond “no miracles” tech into fusion.

A Kardashev Type-1 energy system. Capturing all the energy incident on our planet from our home star.
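For anyone who wants to check that order of magnitude on the back of an envelope, here is a minimal sketch. The figures in it are my own round-number assumptions rather than anything from a cited source: a solar constant of about 1361 W/m², Earth's mean radius, and global primary energy use of very roughly 19 TW (about 600 EJ a year). It also counts all sunlight reaching the top of the atmosphere, which is the generous Type-1 reading and far more than could practically be harvested.

```python
import math

# Assumed round figures (illustrative, not taken from the post's sources)
SOLAR_CONSTANT_W_M2 = 1361.0   # sunlight intensity at the top of the atmosphere
EARTH_RADIUS_M = 6.371e6       # mean radius of the Earth
WORLD_DEMAND_W = 19e12         # ~19 TW average primary energy use (~600 EJ/year)


def intercepted_solar_power_w(solar_constant=SOLAR_CONSTANT_W_M2,
                              radius=EARTH_RADIUS_M):
    """Power falling on the disc the Earth presents to the Sun, in watts."""
    return solar_constant * math.pi * radius ** 2


def headroom_ratio(demand_w=WORLD_DEMAND_W):
    """How many times over incident sunlight covers the assumed demand."""
    return intercepted_solar_power_w() / demand_w


if __name__ == "__main__":
    power = intercepted_solar_power_w()
    ratio = headroom_ratio()
    print(f"Sunlight intercepted by Earth: {power:.2e} W")
    print(f"Ratio to assumed demand: about {ratio:,.0f}x")
    print(f"Nearest order of magnitude: 10^{round(math.log10(ratio))}")
```

Change `WORLD_DEMAND_W` to whatever estimate of current demand you prefer; the ratio moves, but with anything in the tens of terawatts it stays in the neighbourhood of four orders of magnitude.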

What happens if we extend one edge of the donut by four orders of magnitude?

What does a Type-1 Donut Economics look like?

(Admission: I cheated a little in the title of this post – a Dyson Sphere would be a Type-2 Civilisation, capturing the entire energy output of The Sun.)

Well – it was 2023, so of course I asked an LLM.

Me: How might one reconcile donut economics and planetary boundaries to economic activity with the notion of Kardashev type one civilisation?

ChatGPT: Reconciling “donut economics” (which focuses on balancing the needs of the environment with the needs of society) and “planetary boundaries” (which identifies the environmental limits within which humanity can safely operate) with the idea of a Kardashev Type I civilization (a civilization that can harness and use all available energy resources on its home planet) is a challenge but not impossible. Here’s a brief reconciliation approach:

- Shared Goal:

- Recognize that both the concepts of donut economics and the Kardashev scale aim for a sustainable, thriving civilization. Donut economics seeks to meet humanity’s basic needs without overshooting our ecological ceiling, and a Type I civilization seeks to maximize energy use in a sustainable manner.

- Redefine Energy Mastery:

- A true Kardashev Type I civilization would not just be about harnessing all energy but doing so sustainably. This means developing energy sources that do not breach our planetary boundaries.

- Technological Advancement:

- Invest in technologies that allow for increased energy utilization without environmental degradation. Examples include highly efficient renewable energy, energy storage solutions, and perhaps even fusion power.

- Circular Economy:

- Align the principles of donut economics with the growth of civilization. This includes designing products for longevity, easy repair, and recycling; minimizing waste; and creating closed-loop systems that mimic natural processes.

- Education & Values:

- Cultivate societal values that prioritize sustainability and the well-being of all its members. By educating the population about the importance of planetary boundaries and sustainable growth, a Type I civilization can emerge that respects these boundaries.

- Integrate Economic and Ecological Thinking:

- Traditional economic models often treat environmental factors as externalities. In moving towards a Kardashev Type I civilization, it’s essential to integrate ecological factors into the very heart of economic models and decision-making processes.

- Global Collaboration:

- A Type I civilization implies global cooperation. This can be seen as an extension of the social foundation in donut economics, where global cooperation helps ensure everyone’s basic needs are met while respecting planetary limits.

- Continuous Monitoring and Feedback:

- Develop and maintain a global monitoring system to ensure that as we approach a Type I civilization status, we do not exceed planetary boundaries. Implement corrective measures when needed.

In essence, becoming a Kardashev Type I civilization while adhering to the principles of donut economics and respecting planetary boundaries means merging advanced technological capacities with deep ecological and social responsibility. It’s a future where we harness the full potential of our planet without harming it, ensuring prosperity and well-being for all.

Me: One of my questions is whether using more energy (so long as it is sustainable, renewable and zero carbon eg solar) is incompatible with donut economics

ChatGPT: Using more energy is not inherently incompatible with donut economics, as long as that energy use aligns with the principles of sustainability and social equity inherent in the donut model.

Let’s break this down:

- Ecological Ceiling:

- The outer ring of the “donut” represents the ecological limits we should not surpass to avoid environmental degradation. Using sustainable, renewable, and zero-carbon energy sources like solar helps ensure we do not exceed this ecological ceiling.

- Social Foundation:

- The inner ring of the “donut” signifies the social basics that every individual should have access to, such as food, water, health, education, etc. Using more renewable energy can support these goals if it’s done in a way that ensures equitable distribution and accessibility. For instance, renewable energy can power schools in off-grid areas, provide clean cooking solutions, or pump clean water, thereby aiding in achieving the social foundation.

- Growth vs. Development:

- It’s essential to differentiate between growth for the sake of growth (which can be detrimental) and genuine development that meets human needs without compromising the environment. Using more energy is acceptable if it’s channelled towards genuine development and not just consumption for consumption’s sake.

- Efficiency and Resilience:

- While using more renewable energy, it’s crucial to prioritize efficiency. This means optimizing energy consumption to get the most benefit out of every unit of energy and designing systems that are resilient to potential shocks.

- Holistic Well-being:

- A significant point in donut economics is that endless economic growth (usually measured as GDP growth) is not the ultimate goal. Instead, the objective is holistic well-being. If using more energy contributes to holistic well-being without causing ecological harm, it aligns with the principles of donut economics.

In summary, using more energy in and of itself is not contrary to the principles of donut economics. However, it’s the how and why of that energy use that matters. If the energy is sourced sustainably, used efficiently, directed towards genuine development, and contributes to holistic well-being without overshooting ecological limits or leaving anyone behind, then it aligns with the philosophy of donut economics.

Me: But could you see a route to growth or new capabilities for a global civilisation due to energy use that is orders of magnitude higher than now, so long as it is zero carbon (eg solar, fusion) – would that be an enlarged donut?

ChatGPT: Certainly, there’s a potential route to growth and new capabilities for a global civilization through a significant increase in energy use, as long as it’s sustainable and zero-carbon.

This could, metaphorically speaking, result in an “enlarged donut,” where the scale and capabilities of civilization expand while still maintaining the balance between ecological limits and social needs.

- Technological Advancement:

- A significant increase in energy availability, especially from sustainable sources like solar or fusion, can fuel technological advancements in various sectors, from transportation and infrastructure to research and space exploration. This could lead to breakthroughs we haven’t yet imagined.

- Space Exploration and Colonization:

- With energy availability orders of magnitude higher than now, humanity could potentially undertake large-scale space missions, colonizing other celestial bodies, mining asteroids, or even reaching for interstellar travel.

- Global Infrastructure Projects:

- Massive energy availability could facilitate projects that benefit humanity globally, like global transportation networks, large-scale desalination plants for potable water, or projects that counteract climate change effects.

- Elevated Living Standards Globally:

- A tremendous amount of sustainable energy could enable the global provision of high living standards, including universal access to healthcare, education, and other public goods, leading to an expanded social foundation in the donut.

- New Economic Models:

- Such energy abundance could challenge traditional economic models and give rise to new ways of value creation, distribution, and consumption, leading to more equitable and sustainable economic systems.

- Ecological Restoration:

- Excess energy can be employed in large-scale ecological restoration projects, actively reversing some of the damage done to the planet, from reforestation to ocean cleanup.

However, there are considerations:

- Physical Limits:

- Even if the energy is zero-carbon, there are still physical limits to consider, such as land use, resource extraction for technology, and potential waste from energy infrastructure.

- Equity and Governance:

- An abundance of energy could exacerbate inequality if not distributed equitably. Robust governance and frameworks would be necessary to ensure that the benefits of such energy abundance are shared widely.

- Cultural and Social Adaptation:

- Rapid technological and infrastructure changes fueled by energy abundance would require cultural and social adaptations, making it essential to consider the well-being and adaptability of communities.

- Holistic Well-being:

- As mentioned before, the end goal shouldn’t be growth for growth’s sake but holistic well-being. An enlarged donut should still maintain this perspective.

In essence, an “enlarged donut” resulting from significant energy growth can be envisioned, where the scale of human capabilities and infrastructure grows but remains within a sustainable and equitable framework. The key is to ensure that this growth enhances the well-being of all without compromising the planet’s ecological integrity.

I mean, that’s pretty good, right? It turns out GPT stands for “Green Plenty Technologies”?

Of course all the caveats around GenAI apply – including the confirmation bias that is baked in to such a dialog.

But!

It’s interesting to me as a starting point for some good old-fashioned human thinking around this stuff. I’m curious if the various bodies that have taken up the mantle of Donut Economics are looking at “Radically Expanded Donuts”… R.E.D. = Green???

One thing that I caught from the dialog was the idea I think I first encountered from Deb Chachra’s newsletter – that we are encouraged to think about energy efficiency over material efficiency, when in fact energy is practically infinite in supply and matter is not.

We find it very hard to think in these terms – if you’re my age, we’ve been encouraged to turn off lights, turn down the heating since our childhoods…

And it’s not that energy efficiency is a bad thing, far from it – pursuing technologies of energy efficiency in a Type-1 world will just extend the headroom of the donut – but much of it comes from a place of considering energy as something produced by the combustion of finite matter.

We should add to that the externalities of energy use, particularly heat – and moving to a NMN world of full electrification would not remove that exhaust heat production but would hugely mitigate it.

I’ll also hope that acts as a bulwark against wasteful crypto bullshit… Abhorring waste and scams is not the same as imagining beyond energy penury.

Our “imaginaries” are constrained by what we imagine the planetary boundaries are – which I think partly leads to my dissatisfaction with degrowth narratives – and so, perhaps, designers can step in to help construct new ones.

I’m not sure what they might be.

I have thoughts of course (see later *)

Genres such as Solarpunk are not yet mainstream – even within the discourse of those familiar with or exploring how to enact Donut Economics (please correct me if I’m wrong here!!!).

But – I’m also not sure it’s for me to create those imaginaries.

Which leads me nicely to the brief I put to the students at Goldsmiths Design in the spring of 2024.

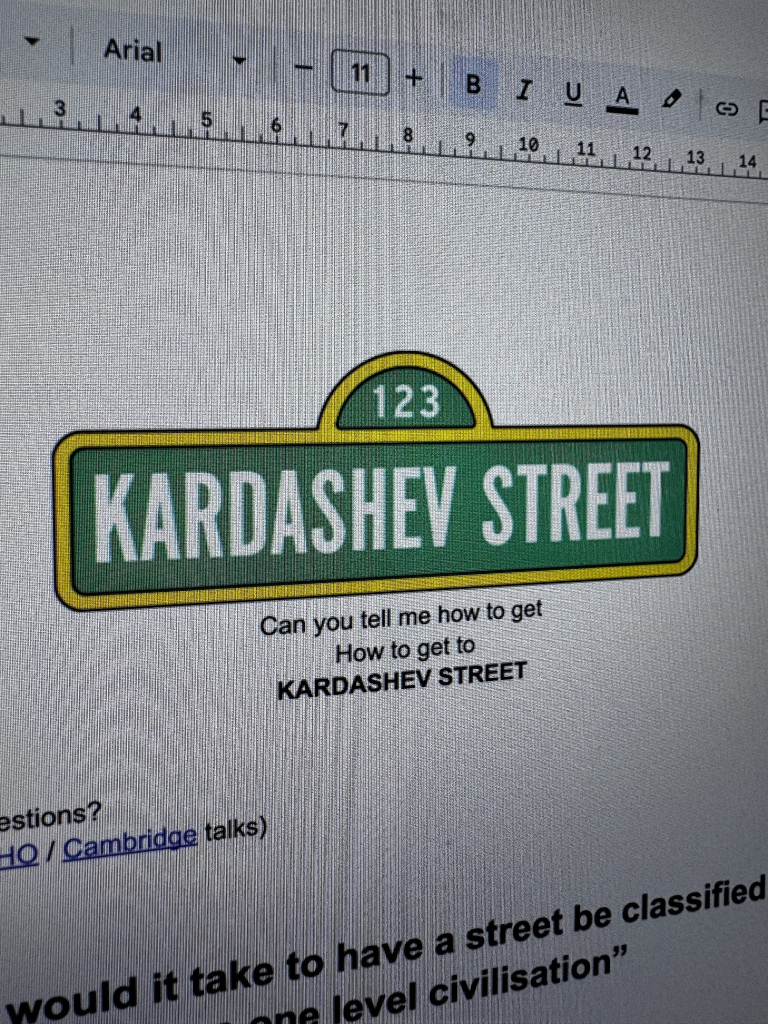

Part 3: Can you tell me how to get, how to get to Kardashev Street?

I was very pleased to be asked by Goldsmiths Design to help set an “industry brief” for the second year students in their spring term.

When I got together with the team there at the end of 2023, this obsession with the “Radically Expanded Donut” was in full flight and so it was somewhat unloaded on them at an evening at The New Cross House.

The conversation led from the notion of a Kardashev Type-1 planet, to what it would mean anchored in the place and routine of daily life.

We started to flesh that out.

What would a Kardashev Type-1 Street be like to live in?

To move into? To move out of? To live in the next street along, that had not yet realised its Type-1 potential for whatever reason?

How do you start that process, or encourage others to do so?

What interconnections, relationships and tensions might arise?

What institutions (financial or otherwise) and services would need to be invited and sustained to support it? What might be the equivalent of the mutual institutions born out of the Industrial Revolution for an equally revolutionary equitable NMN transition?

How does it connect to the transition design thinking pursued by Cameron Tonkinwise et al., or the “Planetary Civics” of Indy Johar et al.?

And of course – Kate Raworth’s “Donut Economics” itself.

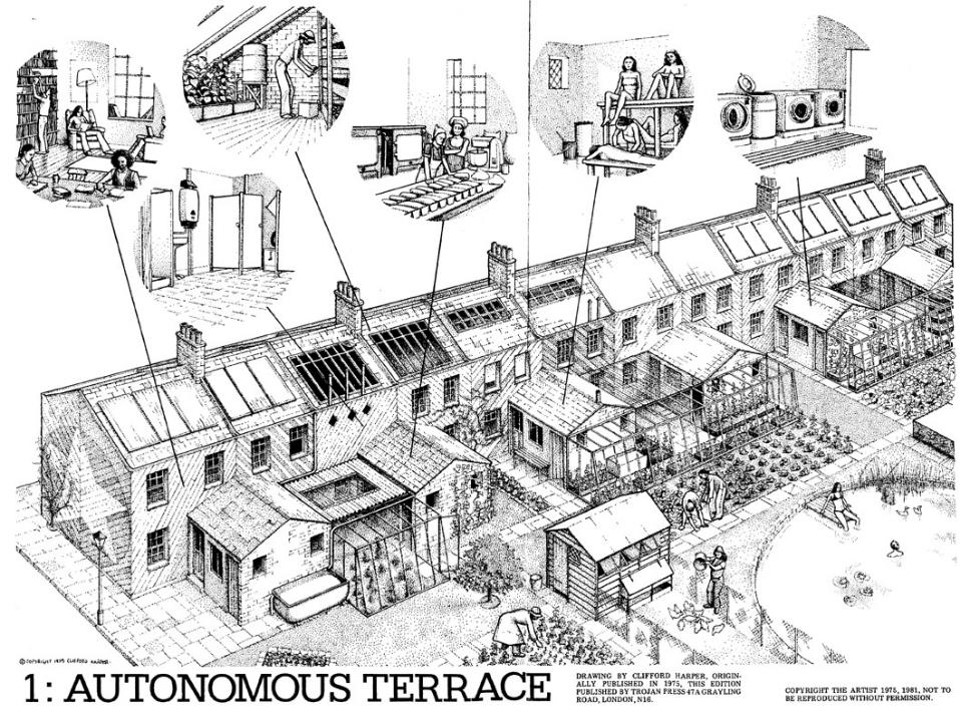

My mind went to the imagery of Clifford Harper in 1974’s Radical Technology – and the writing decades later of Adam Greenfield in his book “Radical Technologies” and also his thoughts on the “convivial stack”.

How might the NMN technologies be procured, shared and maintained by a community in place – say the terraced houses typical in the UK?

My mind went to the story of the street in Walthamstow, London trying to create its own shared solar energy infrastructure – and the barriers to that created by the commercial/regulatory status quo.

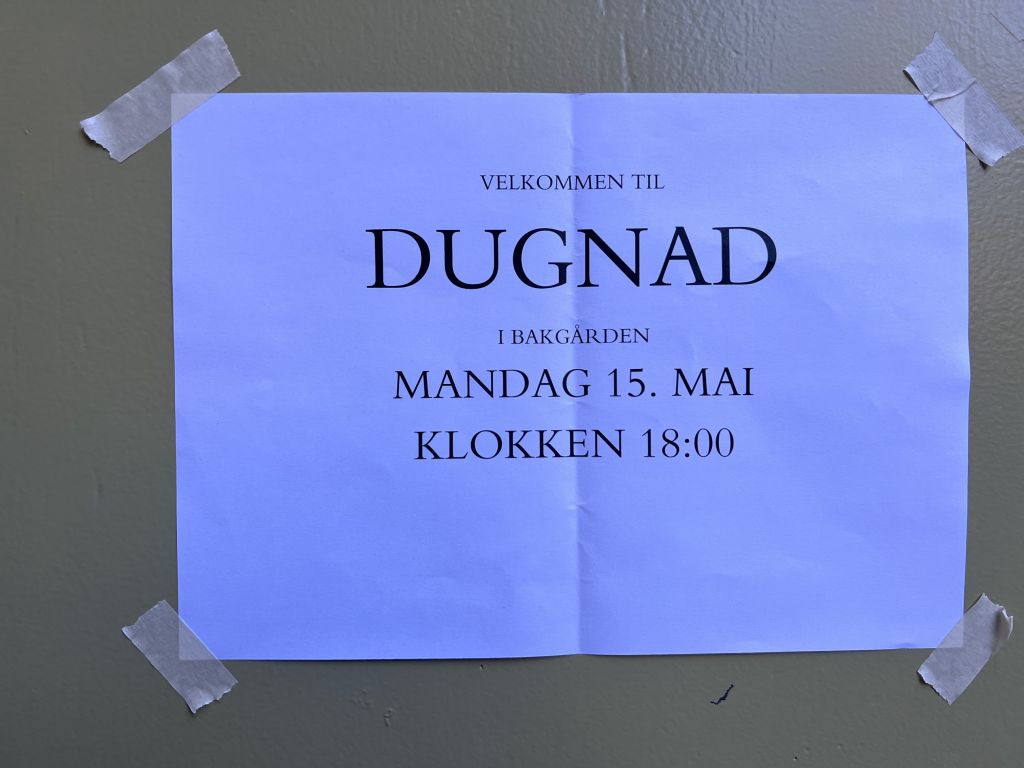

In Oslo back in May 2023, we’d been made familiar with the custom of “dugnad”, where the occupants of an apartment block work together to maintain their common infrastructure and spaces.

What rituals and expectations might be born on a Kardashev Type-1 street? How would they be transmitted across communities, cultures and generations?

The students wrestled with the brief admirably – and I’ll post some of the outcomes here soon if I can.

* Part 4 / KardaCoda or Willfully-generative human with no undo vs GenAI

Finally – when asking ChatGPT about all this back in the autumn of 2023, it declined to create a diagram of the Radically Expanded Donut for me.

What it did (kinda) produce was a brief:

I’m sorry, I cannot create drawings directly. However, I can describe how to visualize or design such a diagram based on the concept:

- Inner Circle – Social Foundation:

- This remains as the core of the donut, representing essential human needs.

- You might want to expand this circle to show a broader range of social amenities, reflecting the increased capabilities of a civilization with massive energy resources. This could include advanced education, universal healthcare, enhanced connectivity, etc.

- Outer Circle – Ecological Ceiling:

- This circle might be farther out than in the traditional donut, reflecting the greater capabilities and infrastructure of an advanced civilization.

- Despite the enlargement, it would still emphasize sustainability and the need to avoid overshooting ecological limits. This means not surpassing boundaries like land use change, freshwater use, or other ecological parameters.

- Space Between The Circles – The Safe and Just Space:

- This area, which represents the space in which humanity can thrive sustainably, would be considerably broader.

- You can divide this space into segments, each representing different sectors fueled by the energy abundance: technological advancements, space exploration, global infrastructure, etc.

- Additional Elements:

- Consider adding visuals that represent the vast energy sources (solar arrays, fusion reactors, etc.) around the donut.

- Depict global collaboration symbols, equity symbols, and other elements that emphasize the importance of shared benefits and governance.

To create this diagram, you could use graphic design software like Adobe Illustrator, Canva, or even simpler tools like PowerPoint. Once you have a clear mental image or sketch, you can work on refining it digitally. If you’re not comfortable creating it yourself, you might want to share the concept with a graphic designer who can bring it to life.

This set me off on the quixotic path of being the slow human illustrator of the GenAI’s “imagination”.

I started sketching. I ordered and awaited the delivery of art materials – including the first airbrush and frisk film I’d possessed since the early 1990s.

I waited for coats of ink and acrylic to dry.

I messed things up and tried to fix them/flip them into analog, material ‘beautiful oopses’ in the absence of the undo function.

It took time.

I got ink under my fingernails.

I had fun.

I didn’t annotate with the things I thought I might.

A technoptimist litany – fusion, air mining, atmospheric carbon removal, desalination, detoxification, open source spime-like fabrication of tools and shelter, universal healthcare, universal basic income, another green food revolution via precision fermentation, soil renewal etc.

Instead, more of an obscure mandala to magic forth the Kardashev Type 1 future.

Instead, Kirby dots, glow-in-the-dark and gold metallic acrylic detailing – and two scrawled ink numbers: 10⁴ for the energy potential of the Radically Expanded Donut – and 10⁷ for the 10 billion people it would hopefully support within the equitable, convivial zone it describes.

I also kind-of ended up making a cosmic goatse, but hey.

Acknowledgments

I’m grateful for the conversations I’ve had with Carolyn and Arjun, the tutors, and of course to Matt Ward for inviting me in.

This post has also been greatly influenced by conversations with Adam Greenfield, Deb Chachra, Dan Hill, Celia Romaniuk and of course, Matt Webb.

The talk and workshop I was invited to give last year by Mosse Sjaastad of AHO, Fredrik Matheson and IxDA Oslo were also a big starting point.